clickhouse实现统计实时排名功能

温馨提示:

本文最后更新于 2023年03月14日,已超过 1,051 天没有更新。若文章内的图片失效(无法正常加载),请留言反馈或直接联系我。

商品搜索统计

商城商品名,搜索成千上万,如何才能实时的获取到当前搜索热度最高的词,或者某一个搜索词排在第几呢?

clickhouse

ClickHouse 是俄罗斯的 Yandex 于 2016 年开源的用于在线分析处理查询(OLAP :Online Analytical Processing)MPP架构的列式存储数据库(DBMS:Database Management System),能够使用 SQL 查询实时生成分析数据报告。ClickHouse的全称是Click Stream,Data WareHouse。

clickhouse可以做用户行为分析,流批一体

线性扩展和可靠性保障能够原生支持 shard + replication

clickhouse没有走hadoop生态,采用 Local attached storage 作为存储

准备工作

mysql搜索日志表

CREATE TABLE `search_log` (

`id` int NOT NULL AUTO_INCREMENT COMMENT 'logId',

`keyword` varchar(255) COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '搜索词',

`createTime` datetime DEFAULT NULL COMMENT '搜索时间',

PRIMARY KEY (`id`),

KEY `createTime` (`createTime`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci;

go测试数据添加

package main

import (

"database/sql"

"github.com/brianvoe/gofakeit/v6"

_ "github.com/go-sql-driver/mysql"

"log"

"math/rand"

"time"

)

type SearchLog struct {

Keyword string

CreateTime string

}

func getDb() *sql.DB {

dsn := "test_goods:xSGFwEXdXbY2GhZG@tcp(127.0.0.1:3306)/test_goods?charset=utf8mb4&parseTime=True"

db, err := sql.Open("mysql", dsn)

if err != nil {

panic(err)

}

return db

}

func main() {

for i := 0; i < 100; i++ {

for true {

searchData := make([]SearchLog, 0)

for j := 0; j < 500; j++ {

searchLog := SearchLog{

Keyword: randGoodsName(),

CreateTime: randTime(),

}

searchData = append(searchData, searchLog)

}

saveData(searchData)

searchData = searchData[0:0]

}

}

}

func randGoodsName() string {

// 生成一个长度在 3 到 50 之间的中文商品名称

gofakeit.Seed(time.Now().UnixNano())

name := gofakeit.Name()

for len([]rune(name)) > 50 || len([]rune(name)) < 3 {

name = gofakeit.Name()

}

return name

}

func randTime() string {

// 生成一个 2023 年 1 月 1 日到 2023 年 4 月 30 日之间的随机时间

start := time.Date(2023, 1, 1, 0, 0, 0, 0, time.Local).Unix()

end := time.Date(2023, 4, 30, 23, 59, 59, 0, time.Local).Unix()

randTime := time.Unix(rand.Int63n(end-start)+start, 0)

// 判断当前时间是否在白天(6:00-18:00),如果是,则再随机生成一次时间

if randTime.Hour() >= 6 && randTime.Hour() < 18 {

randTime = time.Unix(rand.Int63n(end-start)+start, 0)

}

return randTime.Format("2006-01-02 15:04:5")

}

func saveData(searchData []SearchLog) {

log.Println("开始保存数据")

db := getDb()

defer db.Close()

// 保存数据

// 插入多条数据

sqlStr := "insert into search_log (keyword,createTime) values"

vals := []interface{}{}

for index, row := range searchData {

if index == len(searchData)-1 {

sqlStr += "(?,?)"

} else {

sqlStr += "(?,?), "

}

vals = append(vals, row.Keyword, row.CreateTime)

}

_, err := db.Exec(sqlStr, vals...) // vals...: 解构

if err != nil {

log.Println("发生错误,重试:" + err.Error())

saveData(searchData)

}

log.Println("1000条处理完成")

}

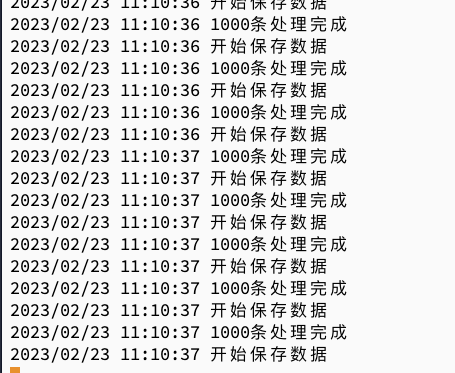

运行go:

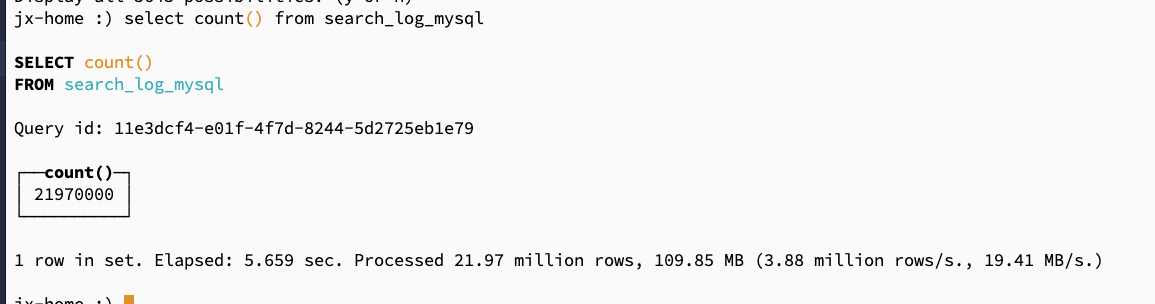

添加 2000万数据测试看看

clickhouse映射mysql数据

创建一张mysql来源的表

create table search_log_mysql(

id Nullable(Int32),

keyword Nullable(String),

createTime Nullable(DateTime)

)engine=MySQL('127.0.0.1:3306','test_goods','search_log','test_goods','xSGFwEXdXbY2GhZG');

编写clickhouse sql

场景一,搜索前10名商品:

jx-home :) select count() as num,keyword from search_log_mysql group by keyword order by num desc limit 10

SELECT

count() AS num,

keyword

FROM search_log_mysql

GROUP BY keyword

ORDER BY num DESC

LIMIT 10

Query id: f22cf3bf-743e-441c-ba8e-7b2351c68f10

┌─num─┬─keyword────┐

│ 151 │ Ezra Terry │

│ 150 │ Lupe Davis │

│ 148 │ Mossie Rau │

│ 148 │ Leon Wiza │

│ 147 │ Mike Haley │

│ 146 │ Luis Fahey │

│ 146 │ Oral Boyle │

│ 145 │ May Boyer │

│ 145 │ Petra Orn │

│ 145 │ Gail Doyle │

└─────┴────────────┘

10 rows in set. Elapsed: 7.469 sec. Processed 21.97 million rows, 471.47 MB (2.94 million rows/s., 63.13 MB/s.)

场景二:获取指定日期搜索排名前10商品

jx-home :)

SELECT

count() AS num,

keyword

FROM search_log_mysql

WHERE (createTime >= '2023-02-01') AND (createTime < '2023-03-01')

GROUP BY keyword

ORDER BY num DESC

LIMIT 10

SELECT

count() AS num,

keyword

FROM search_log_mysql

WHERE (createTime >= '2023-02-01') AND (createTime < '2023-03-01')

GROUP BY keyword

ORDER BY num DESC

LIMIT 10

Query id: eebf52de-7791-4a9f-bdf2-6d4ad99cd908

┌─num─┬─keyword────┐

│ 52 │ Rita Hilll │

│ 49 │ Sage Adams │

│ 48 │ Tod Veum │

│ 48 │ Oral Boyle │

│ 47 │ Isac Olson │

│ 46 │ Jade Yundt │

│ 46 │ Clark Von │

│ 46 │ Neha Ryan │

│ 46 │ Jan Bailey │

│ 45 │ Tyra Hahn │

└─────┴────────────┘

10 rows in set. Elapsed: 7.117 sec. Processed 5.13 million rows, 135.78 MB (721.03 thousand rows/s., 19.08 MB/s.)

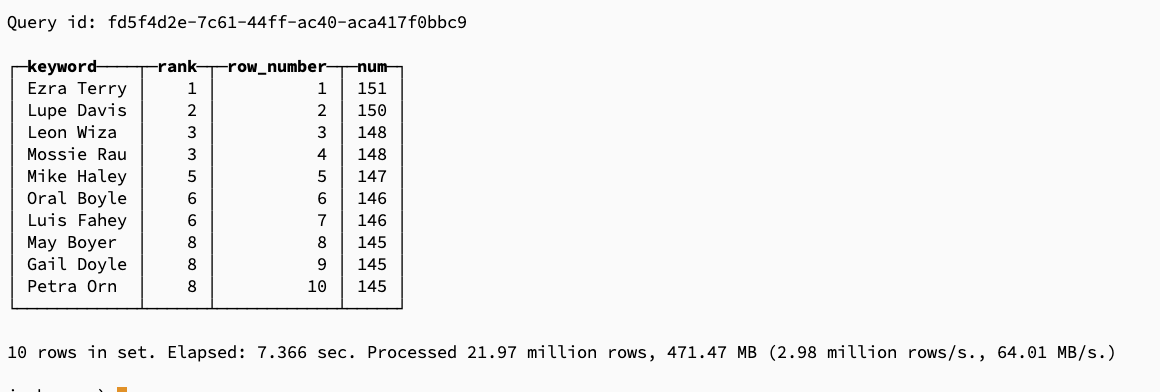

场景三,获取某一个商品名的搜索排名

首先我们需要知道一个窗口函数

select keyword,

rank() OVER w AS rank,

row_number() OVER w AS row_number,

num

from (

SELECT

count() AS num,

keyword

FROM search_log_mysql

GROUP BY keyword

ORDER BY num DESC

)

WINDOW w AS (ORDER BY num DESC range unbounded preceding) limit 10

// WINDOW w AS (PARTITION BY keyword ORDER BY num DESC range unbounded preceding) limit 10 ####PARTITION BY xxx 可以根据某个字段值进行分组做窗口处理

输出:

再进行包裹一层,获取排名

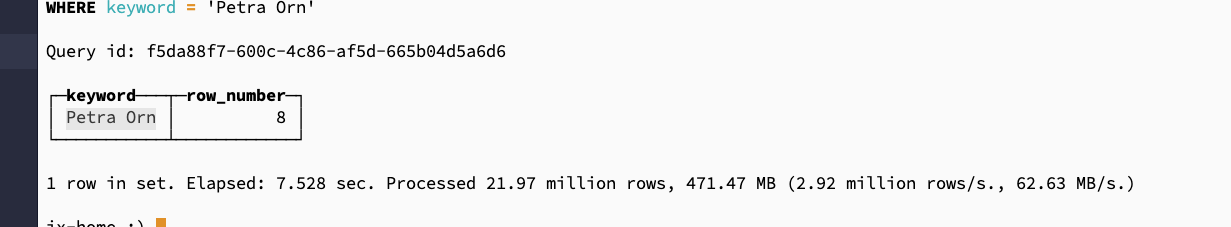

查询商品Petra Orn 的排名

SELECT

keyword,

row_number

FROM

(

SELECT

keyword,

rank() OVER w AS rank,

row_number() OVER w AS row_number,

num

FROM

(

SELECT

count() AS num,

keyword

FROM search_log_mysql

GROUP BY keyword

ORDER BY num DESC

)

WINDOW w AS (ORDER BY num DESC RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)

)

WHERE keyword = 'Petra Orn'

正文到此结束

- 本文标签: 数据库 clickhouse

- 本文链接: https://www.php20.cn/article/422

- 版权声明: 本文由仙士可原创发布,转载请遵循《署名-非商业性使用-相同方式共享 4.0 国际 (CC BY-NC-SA 4.0)》许可协议授权